Discussion on Ethical Artificial Intelligence (AI) Governance: It Is Not Just About Code

Cambodia's Artificial Intelligence (AI) Readiness Assessment Report, finalized in July 2025, directly addresses gender inclusion. It identifies a significant gender gap in science, technology, engineering, and mathematics (STEM) and in the AI workforce as one of the country's main challenges: women make up only 16.68% of STEM graduates, compared with 83.32% for men. Closing this gap is therefore essential to ensure fairness and to keep AI systems from perpetuating and amplifying biases that already exist in society.

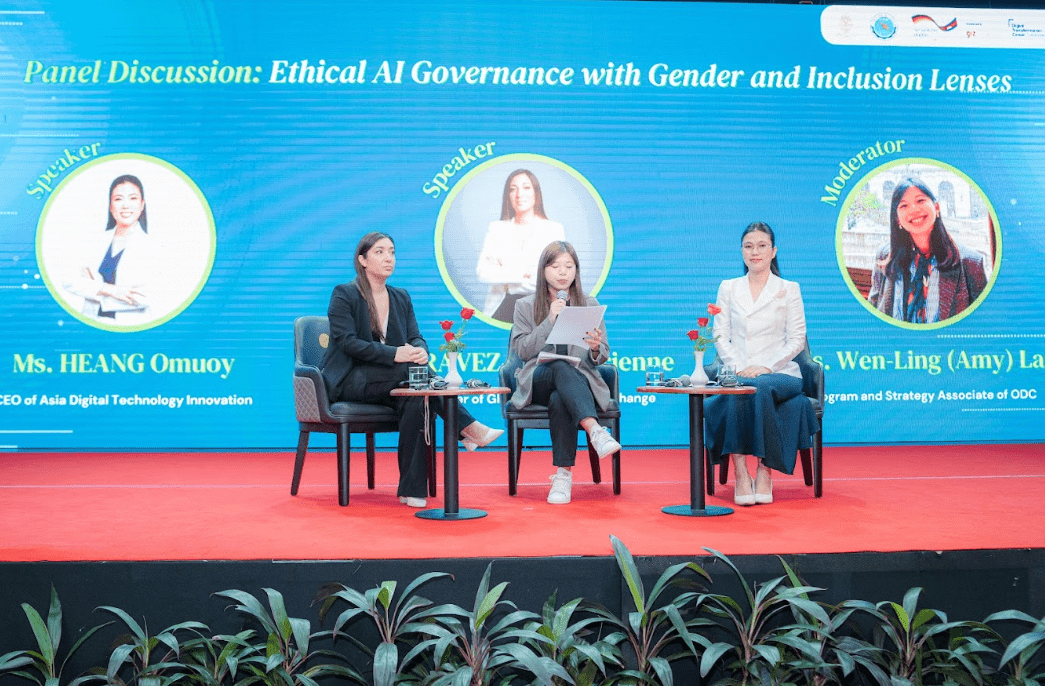

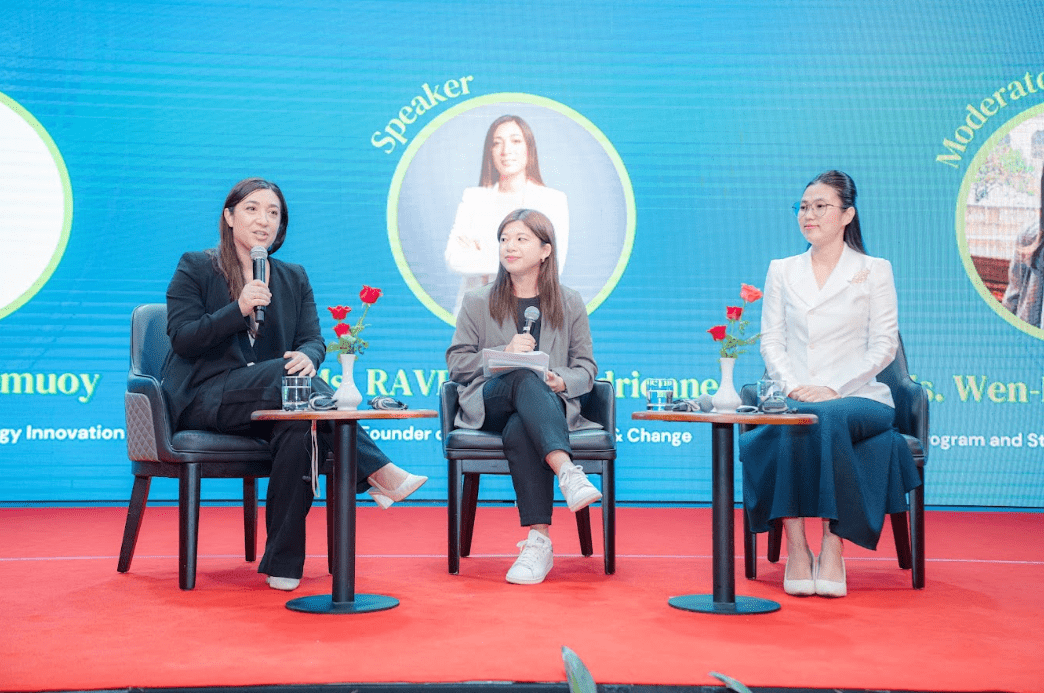

The discussion was moderated by Ms. Wen-Ling (Amy) Lai, Program and Strategy Associate at Open Development Cambodia (ODC). The speakers were Ms. Heang Omuoy, Chief Executive Officer of Asia Digital Technology Innovation (ADITI), and Ms. Adrienne Ravez-Men, co-founder of Global Innovation & Change. Together they offered an in-depth look at the principles of ethical AI governance, viewed specifically through the lens of gender and inclusion.

The core theme was what it takes "for AI to be a force for good," one that drives both sustainability and positive social impact: its governance must be built proactively, not only in reaction to what has already happened. The foundation of this ethical framework rests on two pillars: transparency and strong security. AI models must be trained for transparency, because users, stakeholders, and regulators must be able to understand how an AI arrives at a particular decision, so that the process is not an opaque black box.

When an AI's recommendations or decisions affect people's lives, from loan applications to recruitment processes, this clarity is paramount for trust. Strong security, in turn, is the foundation of accountability. When a system is secure, the way data is entered and used can be traced for errors, bias, or misuse. This accountability mechanism is where the principle of genuine inclusion begins to reinforce the foundation of ethical governance. By ensuring that AI is built on processes that are secure, traceable, and understandable, we can ensure that its use is fair, accountable, and capable of producing lasting social benefit.

At the same time, the speakers cautioned against viewing AI as an autonomous, infallible solution. They reminded us that AI is only a tool, and that it can be used for both good and ill. This is why human oversight matters and must remain at the center of any AI deployment strategy. Ethical AI governance therefore cannot be merely a set of rules applied to code. It must go hand in hand with broad, sound digital training for users.

No matter how well an AI system is governed, operators, decision-makers, and users must understand the tool's capabilities, limitations, and ethical boundaries. Without this layer of informed human oversight and intervention, any governance framework risks being undermined by user error, deliberate misuse, or lack of understanding. The quality of the human factor directly determines the quality of AI's impact. A core theme of the discussion was the need to broaden the definition of "inclusion" beyond gender balance alone.

The speakers emphasized that while gender is an important factor to consider when training AI, it is not the only factor that drives bias or inequality. To build systems that are truly ethical and fair, AI developers and policymakers must prioritize data that captures the full spectrum of human diversity.

This broader approach requires attention to data that reflects different social backgrounds and socioeconomic status and, importantly, data on people with low digital literacy. When a dataset is heavily skewed, that is, when it overwhelmingly represents people who are affluent, have access to modern digital technology, or live in cities, the AI model's outputs will be biased against those who are marginalized or excluded from the digital system.

This bias risks replicating, and even amplifying, real-world inequalities in the digital sphere. The speakers made a compelling case that recognizing the diversity of human experience, from economic status to digital access, is equally important for achieving a sustainable and fair future for AI.

The main challenge identified in the discussion is the wide gap between the pace of AI innovation and the pace at which governance is established. Technology often develops at an exponential rate, while regulatory institutions, laws, and ethical frameworks develop more slowly and linearly. It is precisely because AI innovation outpaces governance that a passive approach is unacceptable.

The discussion concluded that it is essential to anticipate the risks of artificial intelligence (AI) and to put preventive mechanisms in place. This means creating dynamic, forward-looking policies that are built around the fundamental principle of inclusion from the very start.

Rather than waiting for new forms of bias or harm to emerge before making rules, policymakers and developers must anticipate the potential negative consequences for different groups of people and embed ethical safeguards into the development cycle. This preventive, inclusive, and future-oriented strategy is the only way to ensure that AI serves humanity broadly and fairly.