The Importance of Gender Inclusion in AI: Bridging the Digital Divide

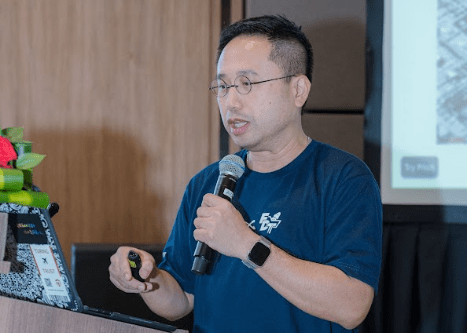

The first presentation of our program, delivered by Dixon Siu, focused on the fundamental concepts of AI and the critical importance of gender inclusion in its development and deployment. It combined a technical introduction to the capabilities of modern AI with a clear warning about the risks of a digital future that is not fully inclusive. He concluded with actionable strategies for advancing ethical and equitable technology.

Mr. Siu opened by carefully laying out the core technical concepts, starting with definitions of AI, machine learning (ML), and deep learning (DL). He explained that DL, a sophisticated subset of ML, is the main technology that gives modern AI its complex cognitive abilities, loosely imitating the way the human brain learns and processes information. From this technical foundation, he introduced large language models (LLMs), such as those that power AI tools like ChatGPT and Gemini, as one of the most visible and widely adopted demonstrations of these advanced capabilities.

Understanding these technical foundations is essential for discussing the profound social impact of AI-powered tools. At the heart of the presentation was an explanation of why gender inclusion matters in AI training: "The principle is simple and unavoidable: AI is only as fair as the data and assumptions we put into it."

The presentation went on to paint a worrying picture of the current technology ecosystem, stressing that the gender gap in the tech sector is far too wide and that, if it is allowed to persist, "we are building a future that leaves many people behind." The disparity is especially frustrating because it does not stem from a lack of ability. The gap persists even as women's university graduation rates rise, which points to a deep disconnect between educational attainment and actual advancement into AI and digital innovation roles.

Summing up briefly, Mr. Siu noted that while the gender gap in AI is slowly narrowing, systemic barriers such as unequal access to AI upskilling, deep-seated bias in hiring, and a lack of representation in leadership continue to severely limit women's full and equal participation in the AI industry.

To show the concrete impact of this systemic exclusion, the audience watched a live demonstration of generative AI tools such as ChatGPT and Gemini. The results were immediately concerning: the stories and scenarios these LLMs produced kept reinforcing traditional gender roles for men and women. This highlighted the core problem: AI learns from historical inequality and carries the biases of past societies into the solutions of the future.

The presentation showed that this bias creates a vicious cycle. Unequal representation in the data from the very start leads to biased AI-generated output, which in turn produces even less representative data, because marginalized groups continue to be ignored, silenced, or harmed by the technology. Mr. Siu added that the problem extends beyond gender: AI also represents characteristics such as skin color and ethnicity inconsistently. As a result, real-world discrimination is copied and amplified in the digital realm, creating injustice that grows rather than shrinks over time.

The fundamental danger lies in AI's feedback loop, in which bias feeds on itself and reinforces these harmful stereotypes. This systemic problem demands urgent attention, because a biased AI means a biased society, posing a direct and existential threat to achieving equity and social justice in the future.
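To make the feedback loop concrete, here is a deliberately simplified toy simulation. It is not a model of any real system: it assumes a hypothetical `amplify` function in which the model over-represents whichever group already dominates its training data (controlled by an assumed `strength` parameter), and it assumes each new training set blends existing data with a fraction (`synthetic_fraction`, also an assumption) of the previous model's output. Under those assumptions, a minority group's share of the data shrinks every round.

```python
from typing import List


def amplify(minority_share: float, strength: float = 2.0) -> float:
    """Hypothetical bias model (an illustrative assumption, not a real model).

    Raising both groups' shares to a power > 1 and renormalising exaggerates
    whichever group is already larger, so a minority share below 0.5 shrinks.
    With strength == 1.0 the output mirrors the input exactly.
    """
    a = minority_share ** strength
    b = (1.0 - minority_share) ** strength
    return a / (a + b)


def simulate_feedback_loop(initial_share: float, rounds: int,
                           strength: float = 2.0,
                           synthetic_fraction: float = 0.5) -> List[float]:
    """Return the minority group's share of the training data after each round."""
    shares = [initial_share]
    share = initial_share
    for _ in range(rounds):
        generated = amplify(share, strength)
        # The next training set mixes data reflecting the current share with
        # content generated by the previous (biased) model.
        share = (1.0 - synthetic_fraction) * share + synthetic_fraction * generated
        shares.append(share)
    return shares


if __name__ == "__main__":
    for round_no, s in enumerate(simulate_feedback_loop(0.3, rounds=5)):
        print(f"round {round_no}: minority share = {s:.3f}")
```

The single knob `strength` makes the point of the talk visible: as long as the model amplifies existing imbalance (`strength > 1`), each round feeds it less representative data, and the skew compounds instead of correcting itself.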

The presentation then moved from defining the problem to actionable solutions, outlining several key steps needed to break this cycle and to improve both inclusion and AI ethics. He emphasized that these are not optional ethical add-ons but core development principles. Key ways to improve gender inclusion in AI include:

- Enforcing data diversity and rigorous documentation protocols, so that the datasets used to train AI are broadly representative

- Adopting continuous bias testing and auditing, to proactively detect and mitigate flaws in how AI systems reason and make decisions

- Prioritizing explainability and transparency, so that AI decision-making is understandable to regulators and users

- Establishing robust ethical and governance frameworks that define clear accountability

- Building in continuous monitoring and feedback mechanisms, so that systems can be adjusted to real-world conditions and experience.
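As a rough illustration of the first two steps (checking data diversity and auditing for bias), the sketch below computes each group's share of a dataset and its selection rate, then reports the demographic parity difference (the gap between the highest and lowest selection rates). The record format, the group labels, the example data, and the `max_gap=0.1` threshold are all hypothetical choices made for this example; a real audit would pick metrics and thresholds suited to its context and would typically use a dedicated fairness toolkit.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Each record is a hypothetical (group, outcome) pair, where outcome is 1 for a
# positive decision (e.g. a CV shortlisted by an AI screening tool) and 0 otherwise.
Record = Tuple[str, int]


def representation(records: Iterable[Record]) -> Dict[str, float]:
    """Share of all records belonging to each group (a simple data-diversity check)."""
    counts: Dict[str, int] = defaultdict(int)
    for group, _ in records:
        counts[group] += 1
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}


def selection_rates(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of positive outcomes within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {g: positives[g] / totals[g] for g in totals}


def audit(records: List[Record], max_gap: float = 0.1) -> dict:
    """Flag the data if the demographic parity difference exceeds max_gap (assumed threshold)."""
    rates = selection_rates(records)
    if not rates:
        raise ValueError("audit needs at least one record")
    gap = max(rates.values()) - min(rates.values())
    return {"representation": representation(records),
            "rates": rates, "gap": gap, "passes": gap <= max_gap}


if __name__ == "__main__":
    # Illustrative, made-up screening results: fewer women in the data,
    # and a lower shortlisting rate for them.
    data = ([("women", 1)] * 2 + [("women", 0)] * 6
            + [("men", 1)] * 6 + [("men", 0)] * 6)
    print(audit(data))
```

Because `audit` takes plain records, it could be re-run on every new batch of model decisions, which is one simple way to turn "continuous testing and monitoring" into a routine check rather than a one-off review.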

Building on this, Mr. Siu concluded that inclusion is not merely an ethical requirement but a strategic one as well. He closed by stating firmly that inclusion is not only the right thing to do but also good business: diverse teams and inclusive products deliver greater value, leading to more innovation, broader market adoption, and ultimately a more sustainable and successful technology sector.